Install ThoughtSpot clusters on the SMC appliance

Install your clusters on the SMC appliance using the ThoughtSpot software release bundle. Installation takes approximately one hour. Make sure you can connect to ThoughtSpot remotely. You may be able to run the installer on your local computer.

Note: ThoughtSpot has stopped selling and renewing hardware appliance contracts. See the CentOS end-of-life announcement for more information and alternative deployment options.

ThoughtSpot Training

For best results when installing ThoughtSpot on the SMC appliance, we recommend that you take the following ThoughtSpot U course: Create a Cluster.

See other training resources at ThoughtSpot U.

Refer to your welcome letter from ThoughtSpot to find the link to download the release bundle. If you haven’t received a link to download the release bundle, open a support ticket with ThoughtSpot support.


Step 1. Run the installer

- Copy the downloaded release bundle to /export/sdb1/TS_TASKS/install using scp:

  $ scp <release-number>.tar.gz admin@<hostname>:/export/sdb1/TS_TASKS/install/<file-name>

  Note the following parameters:

  release-number is the release number of your ThoughtSpot installation, such as 6.0, 5.3, 5.3.1, and so on.

  hostname is your specific hostname.

  file-name is the name of the tarball file on your local machine.

  You can use another secure copy method, if you prefer a method other than the scp command.

- Alternatively, use tscli fileserver download-release to download the release bundle. You must configure the fileserver by running tscli fileserver configure before you can download the release.

  $ tscli fileserver download-release <release-number> --user <username> --out <release-location>

  Note the following parameters:

  release-number is the release number of your ThoughtSpot instance, such as 5.3, 5.3.1, 6.0, and so on.

  username is the username for the fileserver that you set up earlier, when configuring the fileserver.

  release-location is the location path of the release bundle on your local machine. For example, /export/sdb1/TS_TASKS/install/6.0.tar.gz.

- Verify the checksum to ensure you have the correct release. Run md5sum -c <release-number>.tar.gz.MD5checksum.

  $ md5sum -c <release-number>.tar.gz.MD5checksum

  Your output says OK if you have the correct release.

- Launch a screen session. Use screen to ensure that your installation doesn’t stop if you lose network connectivity.

  $ screen -S DEPLOYMENT

- Take a machine snapshot prior to the release deployment, as a best practice.

- Create the cluster. Run:

  $ tscli cluster create <release-number>.tar.gz

- Edit the output using your specific cluster information. For more information on this process, refer to Using the tscli cluster create command and Parameters of the cluster create command.

- The cluster installer automatically reboots all the nodes after the install, which takes a few minutes. Wait at least 15 minutes for the installation process to complete.

  Sign in to any node to check the current cluster status by running this command:

  $ tscli cluster status
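The checksum verification in the list above can be tried end to end on a throwaway file before you run it against the real bundle. The following is a minimal sketch, not ThoughtSpot tooling: the file name demo-release.tar.gz and the helper verify_bundle are hypothetical, and it assumes that GNU md5sum is available. It creates a stand-in bundle, writes a checksum file in the format that md5sum -c expects, verifies it, and then shows that a modified bundle fails verification.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if md5sum -c reports OK for every file
# listed in the checksum file passed as $1.
verify_bundle() {
    checksum_file=$1
    # Run from the directory that holds the checksum file, because the
    # file names recorded inside it are relative paths.
    ( cd "$(dirname "$checksum_file")" &&
      LC_ALL=C md5sum -c "$(basename "$checksum_file")" )
}

# Build a throwaway stand-in for <release-number>.tar.gz and its checksum file.
workdir=$(mktemp -d)
printf 'stand-in release bundle\n' > "$workdir/demo-release.tar.gz"
( cd "$workdir" && md5sum demo-release.tar.gz > demo-release.tar.gz.MD5checksum )

# An untouched bundle verifies cleanly.
good_result=$(verify_bundle "$workdir/demo-release.tar.gz.MD5checksum")
echo "$good_result"

# Modify the bundle: verification should now fail with a non-zero status.
printf 'tampered\n' >> "$workdir/demo-release.tar.gz"
if verify_bundle "$workdir/demo-release.tar.gz.MD5checksum" >/dev/null 2>&1; then
    bad_status=0
else
    bad_status=1
fi
echo "exit status after tampering: $bad_status"

rm -rf "$workdir"
```

Checking the exit status rather than reading the output by eye makes it harder to miss a failed verification in a long terminal session.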

Step 2. Check cluster health

After you install the cluster, check its status using the tscli cluster status and tscli cluster check commands.

$ tscli cluster status

Cluster: RUNNING

Cluster name : thoughtspot

Cluster id : 1234X11111

Number of nodes : 3

Release : 6.0

Last update = Wed Oct 16 02:24:18 2019

Heterogeneous Cluster : False

Storage Type : HDFS

Database: READY

Number of tables in READY state: 2185

Number of tables in OFFLINE state: 0

Number of tables in INPROGRESS state: 0

Number of tables in STALE state: 0

Number of tables in ERROR state: 0

Search Engine: READY

Has pending tables. Pending time = 1601679ms

Number of tables in KNOWN_TABLES state: 1934

Number of tables in READY state: 1928

Number of tables in WILL_REMOVE state: 0

Number of tables in BUILDING_AND_NOT_SERVING state: 0

Number of tables in BUILDING_AND_SERVING state: 128

Number of tables in WILL_NOT_INDEX state: 0

Ensure that the cluster is RUNNING and that the Database and Search Engine are READY.
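The readiness check described above can be scripted instead of read by eye. The following is a sketch under stated assumptions: the helper names cluster_is_ready and wait_for_cluster are hypothetical, the match patterns are taken from the sample listing above rather than from a documented output format, and the demo uses a stand-in function in place of tscli cluster status so that it runs anywhere.

```shell
#!/bin/sh
# Hypothetical helper: read `tscli cluster status` output on stdin and
# succeed only if the three headline states match the values named above.
cluster_is_ready() {
    status_text=$(cat)
    printf '%s\n' "$status_text" | grep -q '^[[:space:]]*Cluster: RUNNING' &&
    printf '%s\n' "$status_text" | grep -q '^[[:space:]]*Database: READY' &&
    printf '%s\n' "$status_text" | grep -q '^[[:space:]]*Search Engine: READY'
}

# Hypothetical helper: run a status command until it reports ready.
# $1 = maximum attempts, $2 = seconds between attempts, rest = command to run.
wait_for_cluster() {
    attempts=$1
    delay=$2
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        if "$@" 2>/dev/null | cluster_is_ready; then
            return 0
        fi
        # Pause between attempts, but not after the final one.
        if [ "$i" -lt "$attempts" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    return 1
}

# Demo: a stand-in for `tscli cluster status` that reports ready on its
# third call. The NOT_READY value is a placeholder, not a real tscli state.
counter_file=$(mktemp)
echo 0 > "$counter_file"
fake_status() {
    n=$(( $(cat "$counter_file") + 1 ))
    echo "$n" > "$counter_file"
    if [ "$n" -ge 3 ]; then
        printf 'Cluster: RUNNING\nDatabase: READY\nSearch Engine: READY\n'
    else
        printf 'Cluster: RUNNING\nDatabase: NOT_READY\nSearch Engine: READY\n'
    fi
}

if wait_for_cluster 5 0 fake_status; then
    wait_result=ready
else
    wait_result=timeout
fi
calls=$(cat "$counter_file")
rm -f "$counter_file"
echo "$wait_result after $calls calls"
```

On a node, the same loop might be invoked as wait_for_cluster 30 60 tscli cluster status; the attempt count and delay here are illustrative, not recommended values.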

Then run tscli cluster check and ensure that all diagnostics show SUCCESS. Your output may look something like the listing at the end of this step.

Note: If tscli cluster check returns an error, it may suggest that you run tscli storage gc to resolve the issue. Running tscli storage gc restarts your cluster.
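Scanning a long tscli cluster check listing for a missing SUCCESS line is easy to get wrong, so it may help to filter the output. The following is a sketch only: failed_diagnostics is a hypothetical helper, and it assumes that every diagnostic prints a START Diagnosing line followed by a SUCCESS line when it passes, as in the sample listing in this step. The FAILURE line in the demo input is invented for illustration and may not match what tscli actually prints.

```shell
#!/bin/sh
# Hypothetical helper: read `tscli cluster check` output on stdin and print
# the name of every diagnostic whose START line is not followed by SUCCESS.
# The exit status is 0 only when nothing is printed.
failed_diagnostics() {
    awk '
        / START Diagnosing / {
            # A new diagnostic begins; if the previous one never reported
            # SUCCESS, record it as failed.
            if (pending != "") failed[++n] = pending
            pending = $NF
        }
        / SUCCESS$/ { pending = "" }
        END {
            if (pending != "") failed[++n] = pending
            for (i = 1; i <= n; i++) print failed[i]
            exit (n > 0)
        }
    '
}

# Demo input: three diagnostics, one of which does not report SUCCESS.
sample='[Wed Jan 8 23:15:47 2020] START Diagnosing ssh
[Wed Jan 8 23:15:47 2020] SUCCESS
################################################################################
[Wed Jan 8 23:15:47 2020] START Diagnosing hdfs
[Wed Jan 8 23:15:48 2020] FAILURE
################################################################################
[Wed Jan 8 23:15:48 2020] START Diagnosing ntp
[Wed Jan 8 23:15:49 2020] SUCCESS'

failed=$(printf '%s\n' "$sample" | failed_diagnostics)
echo "diagnostics without SUCCESS: $failed"
```

On a node, piping the real command into the helper (tscli cluster check | failed_diagnostics) would list only the diagnostics that need attention.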

$ tscli cluster check

Connecting to hosts...

[Wed Jan 8 23:15:47 2020] START Diagnosing ssh

[Wed Jan 8 23:15:47 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:47 2020] START Diagnosing connection

[Wed Jan 8 23:15:47 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:47 2020] START Diagnosing zookeeper

[Wed Jan 8 23:15:47 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:47 2020] START Diagnosing sage

[Wed Jan 8 23:15:48 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:48 2020] START Diagnosing timezone

[Wed Jan 8 23:15:48 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:48 2020] START Diagnosing disk

[Wed Jan 8 23:15:48 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:48 2020] START Diagnosing cassandra

[Wed Jan 8 23:15:48 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:48 2020] START Diagnosing hdfs

[Wed Jan 8 23:16:02 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:02 2020] START Diagnosing orion-oreo

[Wed Jan 8 23:16:02 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:02 2020] START Diagnosing memcheck

[Wed Jan 8 23:16:02 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:02 2020] START Diagnosing ntp

[Wed Jan 8 23:16:08 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:08 2020] START Diagnosing trace_vault

[Wed Jan 8 23:16:09 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:09 2020] START Diagnosing postgres

[Wed Jan 8 23:16:11 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:11 2020] START Diagnosing disk-health

[Wed Jan 8 23:16:11 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:11 2020] START Diagnosing falcon

[Wed Jan 8 23:16:12 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:12 2020] START Diagnosing orion-cgroups

[Wed Jan 8 23:16:12 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:12 2020] START Diagnosing callosum

/usr/lib/python2.7/site-packages/urllib3/connectionpool.py:852: InsecureRequestWarning: Unverified HTTPS request is being made. Adding certificate verification is strongly advised. See: https://urllib3.readthedocs.io/en/latest/advanced-usage.html#ssl-warnings

InsecureRequestWarning)

[Wed Jan 8 23:16:12 2020] SUCCESS

################################################################################

Step 3. Finalize installation

After the cluster status changes to “Ready,” sign in to the ThoughtSpot application in your browser.

Follow these steps:

-

Start a browser from your computer.

-

Enter your secure IP information on the address line.

https://<IP-address> -

If you don’t have a security certificate for ThoughtSpot, you must bypass the security warning to proceed:

-

Select Advanced

-

Select Proceed

-

-

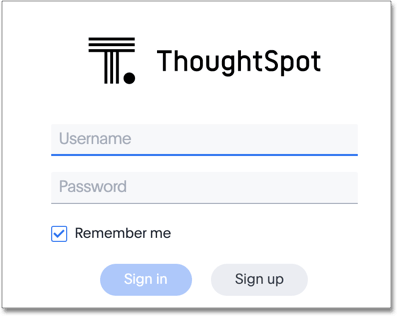

The ThoughtSpot sign-in page appears.

-

In the ThoughtSpot sign-in window, enter admin credentials, and select Sign in. If you don’t know the admin credentials, ask your network administrator. ThoughtSpot recommends changing the default admin password.

Lean configuration

For use with thin provisioning only: If you have a small or medium instance type, with less than 100 GB of data, you must use advanced lean configuration before loading any data into ThoughtSpot. After installing the cluster, contact ThoughtSpot support for assistance with this configuration.

Error recovery

Set-config error recovery

If you get a warning about node detection when you run the set-config command, restart the node-scout service.

Your error may look something like the following:

Connecting to local node-scout WARNING: Detected 0 nodes, but found configuration for only 1 nodes.

Continuing anyway. Error in cluster config validation: [] is not a valid link-local IPv6 address for node: 0e:86:e2:23:8f:76 Configuration failed.

Please retry or contact support.

Restart the node-scout service with the following command.

$ sudo systemctl restart node-scout

Confirm that the node-scout service restarted by running sudo systemctl status node-scout.

Your output should specify that the node-scout service is active.

It may look something like the following:

$ sudo systemctl status node-scout

● node-scout.service - Setup Node Scout service

Loaded: loaded (/etc/systemd/system/node-scout.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2019-12-06 13:56:29 PST; 4s ago

Next, retry the set-config command.

$ cat nodes.config | tscli cluster set-config

The command output should no longer have a warning.
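The restart, verify, and retry sequence above can be guarded so that set-config is only retried once node-scout is confirmed running. The following is a minimal sketch: unit_is_running is a hypothetical helper that inspects systemctl status text on stdin, and the demo feeds it the sample output from this section rather than querying a live service.

```shell
#!/bin/sh
# Hypothetical helper: read `systemctl status <unit>` output on stdin and
# succeed only if the Active: line reports "active (running)".
unit_is_running() {
    grep -Eq '^[[:space:]]*Active: active \(running\)'
}

# Sample text copied from the status listing in this section.
sample_status='● node-scout.service - Setup Node Scout service
Loaded: loaded (/etc/systemd/system/node-scout.service; enabled; vendor preset: disabled)
Active: active (running) since Fri 2019-12-06 13:56:29 PST; 4s ago'

if printf '%s\n' "$sample_status" | unit_is_running; then
    scout_state=running
else
    scout_state=not-running
fi
echo "node-scout is $scout_state"
```

On the node itself, the check and the retry could be chained as sudo systemctl status node-scout | unit_is_running && cat nodes.config | tscli cluster set-config. The built-in systemctl is-active node-scout is a shorter alternative to parsing the status text.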
