Deploy ThoughtSpot clusters on your own OS

After you configure and run the Ansible playbook, you are ready to deploy ThoughtSpot clusters.

Note: If your organization requires that privilege escalation take place through an external tool that integrates with Ansible, refer to Manage cluster operations on Ansible for clusters that use RHEL.

Prerequisites

Ensure that you have completed the following prerequisites:

- Review the required network ports for operation of ThoughtSpot, and ensure that the required ports are open and available.

- Complete the prerequisites for deploying ThoughtSpot on your own OS, including setting up hosts. Note that the configuration requirements for the hosts depend on the platform type: AWS, GCP, and so on.

- Download the ThoughtSpot artifacts for deployment.

- Run the offline script, if deploying offline.

Prepare disks

After the Ansible playbook finishes, run the prepare_disks script on every node. You must run this script with elevated privileges, either with sudo or as the root user. After the script name, specify the full device path of every data drive, such as /dev/sdc, separating the paths with spaces.

Run the prepare_disks script, either with sudo or as a root user:
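The script location is not reproduced here. As a minimal sketch, assuming a hypothetical path held in the PREPARE_DISKS variable (substitute the real location on your nodes), the following helper checks that every argument is a full device path and prints the command line to run. The function name build_prepare_disks_cmd is illustrative and not part of ThoughtSpot.

```bash
# ASSUMPTION: hypothetical script location; replace with the real path on your nodes.
PREPARE_DISKS="${PREPARE_DISKS:-./prepare_disks.sh}"

# Print the prepare_disks command line for the given data drives.
# Rejects any argument that is not a full /dev/... device path.
build_prepare_disks_cmd() {
  if [ "$#" -eq 0 ]; then
    echo "error: specify at least one data drive" >&2
    return 1
  fi
  for dev in "$@"; do
    case "$dev" in
      /dev/?*) ;;
      *) echo "error: '$dev' is not a full device path" >&2; return 1 ;;
    esac
  done
  # Drives are separated by a single space, as the text requires.
  echo "sudo $PREPARE_DISKS $*"
}

# Example with two data drives; only prints the command, does not run it.
build_prepare_disks_cmd /dev/sdc /dev/sdd
```

Printing the command instead of running it lets you review the drive list on each node before anything touches the disks.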

Install ThoughtSpot clusters

After you complete the prerequisites and run prepare_disks, you are ready to install ThoughtSpot clusters.

- Launch a screen session. Use screen to ensure that your installation does not stop if you lose network connectivity.

$ screen -S DEPLOYMENT

- Take a machine snapshot prior to the release deployment, as a best practice.

- Create the cluster by running the tscli cluster create command. If you are using an S3 or GCS bucket for object storage, add the flag --enable_cloud_storage=s3a or --enable_cloud_storage=gcs to the end of the command.

$ tscli cluster create <release-number>.tar.gz

- Edit the output with your specific cluster information. Fill out the cluster name, cluster ID, email alert preferences, and the node IPs at the prompts specified. Don’t edit any other sections of the installer script.

- Cluster Name: Name your cluster based on the ThoughtSpot naming convention, in the form company-clustertype-location-clusternumber. For example, ThoughtSpot-prod-Sunnyvale-12.

- Cluster ID: Enter the ID of your cluster that ThoughtSpot Support provided for you. Open a ticket with ThoughtSpot Support if you do not have an ID.

- Host IPs: Enter the IP addresses of all cluster hosts, in the form 000.000.000.000. For example, 192.168.7.70. Use spaces instead of commas to separate multiple IP addresses.

- Email alerts: Enter the email addresses that should receive alerts about this cluster, in the form [email protected]. The address [email protected] appears automatically and should remain, so that ThoughtSpot can be aware of the status of your cluster. Separate email addresses using a space.
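Before starting the installer, it can help to assemble the create command and sanity-check the host IP list you plan to enter. The sketch below is one way to do that in plain shell. The function names are illustrative, the second IP address in the example is made up, and only the two --enable_cloud_storage values named above (s3a and gcs) are accepted.

```bash
# Succeed if $1 is a dotted-quad IPv4 address with each octet in 0-255.
# Runs in a subshell so that changing IFS does not leak to the caller.
is_ipv4() (
  case "$1" in
    ''|*[!0-9.]*|.*|*.|*..*) exit 1 ;;
  esac
  IFS=.
  set -- $1
  [ "$#" -eq 4 ] || exit 1
  for octet in "$@"; do
    [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || exit 1
  done
)

# Check a host IP list exactly as it would be typed at the prompt:
# space-separated, no commas, every entry a valid IPv4 address.
check_host_ips() {
  case "$1" in
    '') echo "error: no host IPs given" >&2; return 1 ;;
    *,*) echo "error: separate IP addresses with spaces, not commas" >&2; return 1 ;;
  esac
  for ip in $1; do
    is_ipv4 "$ip" || { echo "error: '$ip' is not a valid IPv4 address" >&2; return 1; }
  done
}

# Print the cluster create command for a release tarball.
# Optional second argument: s3a or gcs, appended as the cloud storage flag.
build_create_cmd() {
  case "${2:-}" in
    '') echo "tscli cluster create $1" ;;
    s3a|gcs) echo "tscli cluster create $1 --enable_cloud_storage=$2" ;;
    *) echo "error: cloud storage must be s3a or gcs" >&2; return 1 ;;
  esac
}

# Example values only.
check_host_ips "192.168.7.70 192.168.7.71" && echo "host IPs look valid"
build_create_cmd "<release-number>.tar.gz" s3a
```

These checks only catch formatting mistakes. They do not confirm that the hosts are reachable or that the release file exists.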

Check cluster health

After the cluster installs, check its status using the tscli cluster status and tscli cluster check commands.

Your output may look similar to the following example. Ensure that the cluster is RUNNING and that the Database and Search Engine are READY.

$ tscli cluster status

Cluster: RUNNING

Cluster name : thoughtspot

Cluster id : 1234X11111

Number of nodes : 3

Release : 6.0

Last update = Wed Oct 16 02:24:18 2019

Heterogeneous Cluster : False

Storage Type : HDFS

Database: READY

Number of tables in READY state: 2185

Number of tables in OFFLINE state: 0

Number of tables in INPROGRESS state: 0

Number of tables in STALE state: 0

Number of tables in ERROR state: 0

Search Engine: READY

Has pending tables. Pending time = 1601679ms

Number of tables in KNOWN_TABLES state: 1934

Number of tables in READY state: 1928

Number of tables in WILL_REMOVE state: 0

Number of tables in BUILDING_AND_NOT_SERVING state: 0

Number of tables in BUILDING_AND_SERVING state: 128

Number of tables in WILL_NOT_INDEX state: 0

Your output for tscli cluster check may look similar to the following example.

Ensure that all diagnostics show SUCCESS.

$ tscli cluster check

Connecting to hosts...

[Wed Jan 8 23:15:47 2020] START Diagnosing ssh

[Wed Jan 8 23:15:47 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:47 2020] START Diagnosing connection

[Wed Jan 8 23:15:47 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:47 2020] START Diagnosing zookeeper

[Wed Jan 8 23:15:47 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:47 2020] START Diagnosing sage

[Wed Jan 8 23:15:48 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:48 2020] START Diagnosing timezone

[Wed Jan 8 23:15:48 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:48 2020] START Diagnosing disk

[Wed Jan 8 23:15:48 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:48 2020] START Diagnosing cassandra

[Wed Jan 8 23:15:48 2020] SUCCESS

################################################################################

[Wed Jan 8 23:15:48 2020] START Diagnosing hdfs

[Wed Jan 8 23:16:02 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:02 2020] START Diagnosing orion-oreo

[Wed Jan 8 23:16:02 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:02 2020] START Diagnosing memcheck

[Wed Jan 8 23:16:02 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:02 2020] START Diagnosing ntp

[Wed Jan 8 23:16:08 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:08 2020] START Diagnosing trace_vault

[Wed Jan 8 23:16:09 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:09 2020] START Diagnosing postgres

[Wed Jan 8 23:16:11 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:11 2020] START Diagnosing disk-health

[Wed Jan 8 23:16:11 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:11 2020] START Diagnosing falcon

[Wed Jan 8 23:16:12 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:12 2020] START Diagnosing orion-cgroups

[Wed Jan 8 23:16:12 2020] SUCCESS

################################################################################

[Wed Jan 8 23:16:12 2020] START Diagnosing callosum

/usr/lib/python2.7/site-packages/urllib3/connectionpool.py:852: InsecureRequestWarning: Unverified HTTPS request is being made. Adding certificate verification is strongly advised. See: https://urllib3.readthedocs.io/en/latest/advanced-usage.html#ssl-warnings

InsecureRequestWarning)

[Wed Jan 8 23:16:12 2020] SUCCESS

################################################################################

If tscli cluster check returns an error, it may suggest you run tscli storage gc to resolve the issue.

If you run tscli storage gc, note that it restarts your cluster.
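The two checks above can also be scripted. The following is a minimal sketch that reads command output on standard input and assumes the output format matches the examples shown in this section. The function names are illustrative. A diagnostic counts as failed here if its START line is not followed by a SUCCESS line, because the failure format itself is not shown in this document.

```bash
# Succeed only if the status text on stdin reports Cluster: RUNNING
# and both Database and Search Engine as READY.
status_is_healthy() {
  awk '
    /^[ \t]*Cluster:[ \t]*RUNNING/      { cluster = 1 }
    /^[ \t]*Database:[ \t]*READY/       { database = 1 }
    /^[ \t]*Search Engine:[ \t]*READY/  { search = 1 }
    END { exit !(cluster && database && search) }
  '
}

# Succeed only if every "START Diagnosing ..." line on stdin is followed
# by a SUCCESS line before the next diagnostic starts.
check_all_passed() {
  awk '
    /START Diagnosing/ { if (pending) failed = 1; pending = 1; seen = 1; next }
    / SUCCESS[ \t]*$/  { pending = 0 }
    END { exit !(seen && !pending && !failed) }
  '
}

# On a cluster node you would pipe the real commands, for example:
#   tscli cluster status | status_is_healthy && echo "cluster is healthy"
#   tscli cluster check  | check_all_passed  && echo "all diagnostics passed"

# Self-contained demonstration with lines taken from the sample output above.
status_is_healthy <<'EOF' && echo "cluster is healthy"
Cluster: RUNNING
Database: READY
Search Engine: READY
EOF
```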


Prepare services

This additional service configuration is only required if you defined the remote_user_management parameter in the Ansible playbook and used your LDAP or Active Directory service account for installation.

Ensure that the following three services only start after the service you use for LDAP/AD integration: nginx, cgconfig, cgroup-init.

If you did not define the remote_user_management parameter, and used a local user for installation (the default), skip the following configuration. Move on to Finalize installation.

The service you use for LDAP/AD integration can vary. In this example, we use the sssd service. To ensure that nginx, cgconfig, and cgroup-init start at the correct time, follow these steps:

- Open the systemd config for each service: nginx, cgconfig, and cgroup-init.

- Add the following line to the config for each of the 3 services, replacing <service-name> with the name of the service you use for LDAP/AD integration:

After=<service-name>.service

For example, if you use the sssd service, add the following line to each config:

After=sssd.service

- If the systemd config for any of the three services already has an After line, append the LDAP/AD service with a space:

After=syslog.target sssd.service

- Reload the systemd configuration so that the changes take effect:

sudo systemctl daemon-reload
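The edit described in the steps above can be sketched as a small shell function. This is an illustration, not a ThoughtSpot tool. The function name add_after_dependency is hypothetical, and the unit file locations on your hosts are not stated here, so the demonstration works on a temporary file. The function appends to an existing After line, or adds a new one directly under the [Unit] header, and leaves the file unchanged if the dependency is already listed.

```bash
# Add "<service>.service" to the After= ordering of a unit file.
# $1: path to the unit file, $2: name of the LDAP/AD service (for example sssd).
add_after_dependency() {
  unit_file=$1
  dep="$2.service"
  tmp=$(mktemp) || return 1
  if grep -q '^After=' "$unit_file"; then
    # Append to the first existing After= line unless already listed.
    awk -v dep="$dep" '
      /^After=/ && !seen {
        seen = 1
        if (index(" " substr($0, 7) " ", " " dep " ") == 0) $0 = $0 " " dep
      }
      { print }
    ' "$unit_file" > "$tmp"
  else
    # No After= line yet: add one directly under the [Unit] header.
    awk -v dep="$dep" '
      { print }
      /^\[Unit\]/ { print "After=" dep }
    ' "$unit_file" > "$tmp"
  fi
  # Write back in place so the file keeps its owner and permissions.
  cat "$tmp" > "$unit_file" && rm -f "$tmp"
}

# Demonstration on a temporary file instead of a real unit file.
unit=$(mktemp)
printf '[Unit]\nAfter=syslog.target\n' > "$unit"
add_after_dependency "$unit" sssd
cat "$unit"
rm -f "$unit"
```

Editing real unit files requires root privileges, and you still need to run sudo systemctl daemon-reload afterward.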

Finalize installation

After the cluster status changes to READY, sign in to ThoughtSpot in your browser.

Follow these steps:

-

Start a browser from your computer.

-

Enter your secure IP information on the address line.

https://<VM-IP> -

If you don’t have a security certificate for ThoughtSpot, you must bypass the security warning:

Select Advanced.

Select Proceed.

-

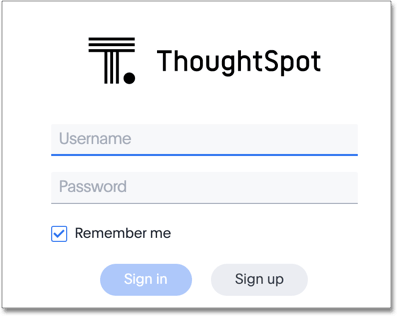

The ThoughtSpot sign-in page appears.

-

In the ThoughtSpot sign-in window, enter admin credentials, and select Sign in.

ThoughtSpot recommends changing the default admin password.

Lean configuration

For use with thin provisioning only: If you have a small or medium instance type, with less than 100 GB of data, you must configure advanced lean mode after installing the cluster and before loading any data into ThoughtSpot.

To configure advanced lean mode, do the following:

- SSH as admin into your ThoughtSpot cluster, using the following syntax:

ssh admin@<cluster-ip-address or hostname>

- Run the advanced lean mode configuration using the following syntax:

tscli config-mode lean [-h] --type {small,medium,default}

Examples:

- To configure your instance with the "small" data size, run:

tscli config-mode lean --type small

- To configure your instance with the "medium" data size, run:

tscli config-mode lean --type medium

If you later decide to disable advanced lean mode, run the command with the default type.
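As a small guard against typos, the lean mode command can be built from a validated type. The sketch below accepts only the three values listed in the syntax above (small, medium, default) and prints the command without running it. The function name lean_mode_cmd is illustrative.

```bash
# Print the advanced lean mode command for a given type.
# Accepts only the values listed in the syntax: small, medium, default.
lean_mode_cmd() {
  case "$1" in
    small|medium|default) echo "tscli config-mode lean --type $1" ;;
    *) echo "error: type must be small, medium, or default" >&2; return 1 ;;
  esac
}

# Example: print the command for the "small" data size.
lean_mode_cmd small
```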

Related information