Sync data from ThoughtSpot to Google BigQuery

Sync to Google BigQuery from an Answer

To create a sync to Google BigQuery from an Answer, follow these steps:

-

Select the desired Answer from the Answers page or the ThoughtSpot home page. You must have Can manage sync permissions and view access to an Answer to create a sync.

-

In the upper-right corner of the Answer, click the more options menu icon

. From the dropdown menu, select Sync to other apps, then choose BigQuery and click Next.

. From the dropdown menu, select Sync to other apps, then choose BigQuery and click Next. -

From the Choose an existing connection window, select your data warehouse and click Allow. If you do not have an existing connection to Google BigQuery, click Select here under Data warehouse and select Add new connection.

The Create connection page appears. For more information on creating a connection to Google Bigquery, see Add a Google BigQuery connection.

Once you create your connection, you are directed back to the Sync page.

-

Within ThoughtSpot, fill in the following parameters:

-

Edit the Pipeline name if needed. By default, this field populates with PL-[Answer Name].

-

Select the Source.

-

Select the Destination from the dropdown menu.

-

Select the Project from the dropdown menu.

-

Select the Dataset from the dropdown menu.

-

Select the Table from the dropdown menu.

-

Select the Operation from the dropdown menu. You can choose between Insert, Update, Upsert, and Delete.

-

Map the Source and Destination columns from the dropdown menus provided. Note that the Source column refers to the column in ThoughtSpot, while the Destination column refers to the column in Google BigQuery.

If you select Upsert as the operation, the External ID option appears as well, to the left of the Source and Destination columns. This option is only clickable if the Destination column is unique (for example, ID). For the external ID column, the source column values will be looked up against the destination column values. For matches on that column, the existing records in Google BigQuery will be updated with the new source columns while records that don’t exist yet will be created and populated using the source column data.

-

-

By default, "Save and sync" is selected. Select Save to send your data to Google BigQuery. Your data immediately appears in Google BigQuery.

-

[Optional] To set up a repeated sync, click Schedule your sync and select your timezone. From the options provided, choose whether the sync will occur every:

-

n minutes. You can choose to schedule a sync every 5, 10, 15, 20, 30, or 45 minutes.

-

n hours.

-

n days at a selected time. Note that you can choose not to send an update on weekends.

-

week at a selected time and day.

-

n months at a selected time and date.

-

| Any sync over 50,000 rows may result in an execution timeout. For optimal performance, keep your sync to below 50,000 rows. If you’re syncing a large number of rows and the sync fails, try applying filters like date filters to make your dataset smaller and then sync. |

Sync to Google BigQuery from a Custom SQL View

To sync to Google BigQuery from a custom SQL view, follow these steps:

-

Navigate to your SQL view by selecting the Data workspace tab and searching from the Data workspace home page. Select the SQL view name.

-

In the upper-right corner, click the more options menu icon

and select Sync to Google BigQuery.

and select Sync to Google BigQuery. -

From the Choose an existing connection window, select your data warehouse and click Allow. If you do not have an existing connection to Google BigQuery, click Select here under Data warehouse and select Add new connection.

The Create connection page appears. For more information on creating a connection to Google Bigquery, see Add a Google BigQuery connection.

Once you create your connection, you are directed back to the Sync page.

-

Fill in the following parameters:

-

Edit the Pipeline name if needed. By default, this field populates with PL-[Answer Name].

-

Select the Source.

-

Select the Destination from the dropdown menu.

-

Select the Project from the dropdown menu.

-

Select the Dataset from the dropdown menu.

-

Select the Table from the dropdown menu.

-

Select the Operation from the dropdown menu. You can choose between Insert, Update, Upsert, and Delete.

-

Map the Source and Destination columns from the dropdown menus provided.

If you select Upsert as the operation, the External ID option appears as well, to the left of the Source and Destination columns. This option is only clickable if the Destination column is unique (for example, ID). For the external ID column, the source column values will be looked up against the destination column values. For matches on that column, the existing records in Google BigQuery will be updated with the new source columns while records that don’t exist yet will be created and populated using the source column data.

-

-

By default, "Sync and save" is selected. Select Save to send your data to Google BigQuery. Your data immediately appears in Google BigQuery.

-

[Optional] To set up a repeated sync, click Schedule your sync and select your timezone. From the options provided, choose whether the sync will occur every:

-

n minutes. You can choose to schedule a sync every 5, 10, 15, 20, 30, or 45 minutes.

-

n hours.

-

n days at a selected time. Note that you can choose not to send an update on weekends.

-

week at a selected time and day.

-

n months at a selected time and date.

-

| Any sync over 50,000 rows may result in an execution timeout. For optimal performance, keep your sync to below 50,000 rows. If you’re syncing a large number of rows and the sync fails, try applying filters like date filters to make your dataset smaller and then sync. |

Failure to sync

A sync to Google BigQuery can fail due to multiple reasons. If you experience a sync failure, consider the following causes:

-

The underlying ThoughtSpot object was deleted.

-

The underlying Google BigQuery object was deleted.

-

The column name was changed in either ThoughtSpot or Google BigQuery, making it different to the column name setup in the mapping.

-

There are data validation rules in Google BigQuery which only allow data with only a certain data type to be populated in the Google BigQuery fields, but the columns being mapped onto Google BigQuery from ThoughtSpot do not have the same or allowable data type.

-

There is a mandatory field in Google BigQuery which has not been mapped onto as a destination column when setting up the mapping in ThoughtSpot.

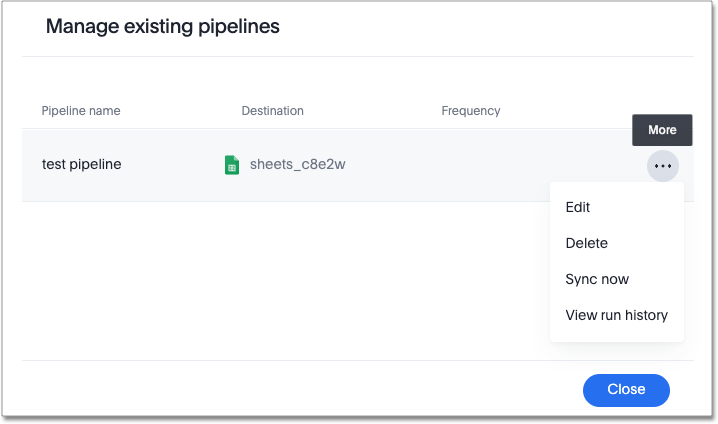

Manage pipelines

While you can also manage a pipeline from the Pipelines tab in the Data workspace, accessing the Manage pipelines option from an Answer or view displays all pipelines local to that specific data object. To manage a pipeline from an Answer or view, follow these steps:

-

Click the more options menu icon

and select Manage pipelines.

and select Manage pipelines. -

Scroll to the name of your pipeline from the list that appears. Next to the pipeline name, select the more options icon

. From the list that appears, select:

. From the list that appears, select:-

Edit to edit the pipeline’s properties. For example, for a pipeline to Google Sheets, you can edit the pipeline name, file name, sheet name, or cell number. Note that you cannot edit the source or destination of a pipeline.

-

Delete to permanently delete the pipeline.

-

Sync now to sync your Answer or view to the designated destination.

-

View run history to see the pipeline’s Activity log in the Data workspace.

-

Related information