Snowflake Performance and Consumption SpotApp

SpotApps are ThoughtSpot’s out-of-the-box solution templates built for specific use cases and data sources. They are built on ThoughtSpot Modeling Language (TML) Blocks, which are pre-built pieces of code that are easy to download and implement directly from the product.

The Snowflake Performance and Consumption SpotApp mimics the Snowflake data model. When you deploy it, ThoughtSpot creates several Worksheets, Answers, and Liveboards, based on your Snowflake data in your cloud data warehouse.

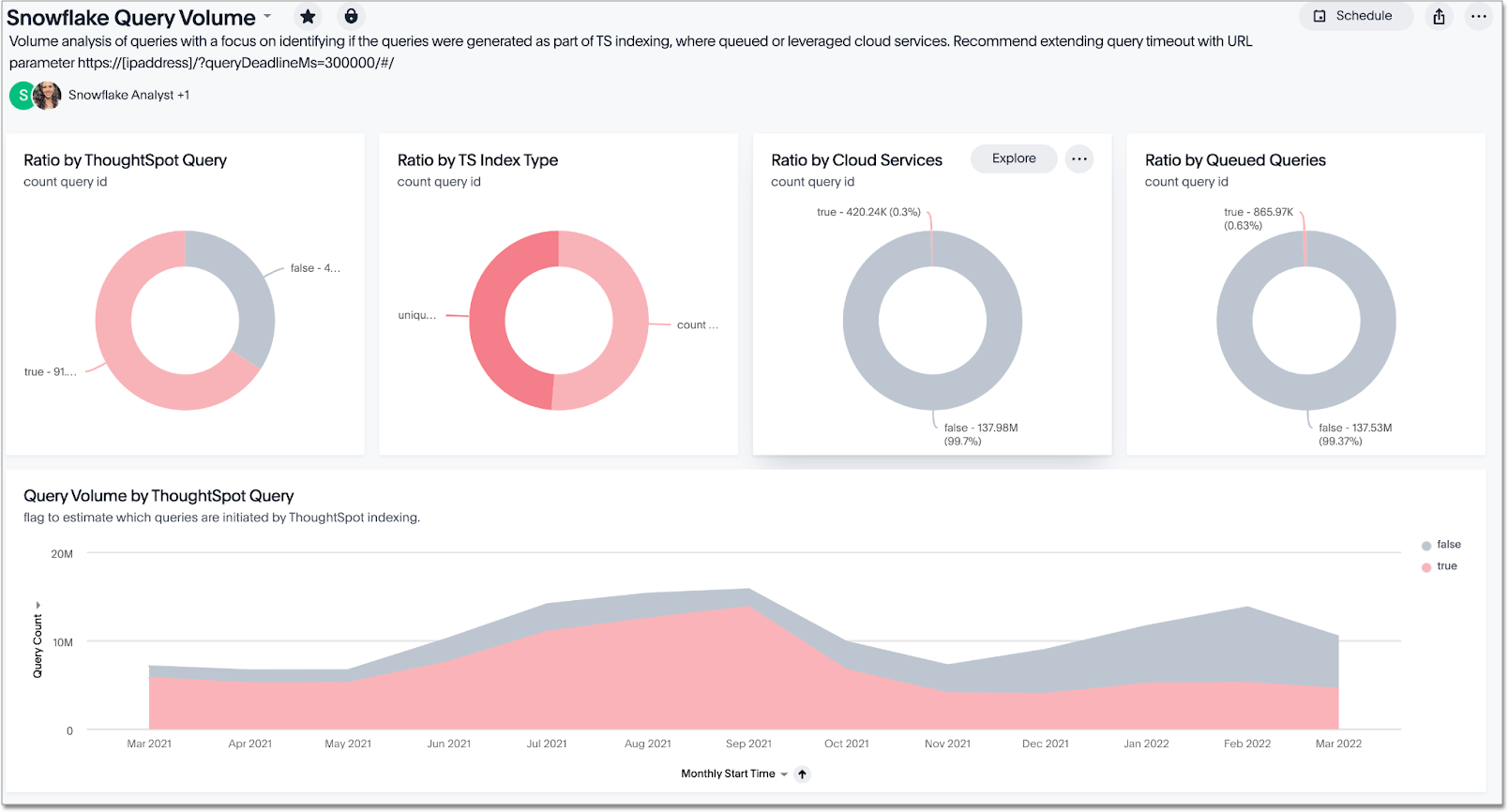

This is a sample Liveboard, created after you deploy the Snowflake Performance and Consumption SpotApp:

Use the Snowflake Performance and Consumption SpotApp to manage Snowflake costs and investigate query performance. Track how and where your users consume credits, and triage and resolve any performance bottlenecks.

Prerequisites

Before you can deploy the Snowflake Performance and Consumption SpotApp, you must complete the following prerequisites:

-

Review the required tables and columns for the SpotApp.

-

Ensure that your columns match the required column type listed in the schema for your SpotApp.

-

Sync all tables and columns from Snowflake to your cloud data warehouse. You can sync only the required tables and columns, but ThoughtSpot recommends syncing all tables and columns from Snowflake to your CDW. The columns can be Snowflake’s out-of-the-box columns, or any custom columns that you are using instead of the out-of-the-box columns.

If you are using an ETL/ELT tool or working with another team in your organization to move data, our recommendation is that you sync all columns from the tables listed in the SpotApp. -

Obtain credentials and SYSADMIN privileges to connect to your Snowflake environment. Your Snowflake environment must contain the data you would like ThoughtSpot to use to create Answers, Liveboards, and Worksheets. Refer to the connection reference for Snowflake for information about required credentials.

-

The connection name for each new SpotApp must be unique.

-

ACCOUNTADMINaccess to Snowflake. -

Access to the SNOWFLAKE database in your Snowflake environment.

-

Access to the following Snowflake tables in your Snowflake environment. Refer to Snowflake Performance and Consumption SpotApp schema for more details.

-

DATABASE_STORAGE_USAGE_HISTORY

-

QUERY_HISTORY

-

STAGES

-

STORAGE_USAGE

-

WAREHOUSE_METERING_HISTORY

-

-

Run the required SQL commands in your cloud data warehouse. Refer to Run SQL commands. Note that you have two options to set up the SpotApp; only choose one of the options.

Run SQL commands

You can set up the SpotApp in one of two ways:

-

Option 1: Copy the data from the SNOWFLAKE database to a different database using Snowflake tasks. This option has faster performance and a customizable cost. Refer to Option 1 SQL commands.

-

Option 2: Query on the system database directly. This option has slower performance and may be more expensive. Refer to Option 2 SQL commands.

Option 1

The following SQL commands grant permission for the role you use in your Snowflake connection to use the Snowflake database. They create a separate database and schema for the data in the Snowflake database, and create the tables with the data. Then, they grant permission for the role you use in your Snowflake connection to use the Snowflake database. Replace <YOUR_ROLE> and <YOUR_WAREHOUSE> with your specific information. The role should be either ACCOUNTADMIN or a custom SpotApps role. You can also modify the schedule. The following commands set the schedule to refresh the table data monthly. Changing the schedule may have performance and cost implications.

| ThoughtSpot recommends you create the database and schema with the suggested names, using the first two commands in the SQL script. However, you can also use your own names. If you use different names, you must replace ThoughtSpot’s suggested names with the names you used. |

Run these commands as the ACCOUNTADMIN. If you don’t have account admin access, you can create a custom role with the permissions required to execute tasks. See the Snowflake documentation.

Make sure to be consistent in your SQL script. If you use double quotes as object identifiers for one object, you must use double quotes for all objects. If you run all the commands at once, use semicolons to separate the commands.

SQL commands:

Open the dropdown menu to view the SQL commands.

CREATE DATABASE "SNOWFLAKE_USAGE_TS";

CREATE SCHEMA “SNOWFLAKE_USAGE_TS”."SNOWFLAKE";

CREATE OR REPLACE TASK UPDATE_QUERY_HISTORY

WAREHOUSE = <YOUR_WAREHOUSE>

SCHEDULE = 'USING CRON 0 0 1 * * UTC'

AS CREATE OR REPLACE TABLE "SNOWFLAKE_USAGE_TS"."SNOWFLAKE"."QUERY_HISTORY" AS (SELECT

"QUERY_ID",

"QUERY_TEXT",

"DATABASE_ID",

"DATABASE_NAME",

"SCHEMA_ID",

"SCHEMA_NAME",

"QUERY_TYPE",

"SESSION_ID",

"USER_NAME",

"ROLE_NAME",

"WAREHOUSE_ID",

"WAREHOUSE_NAME",

"WAREHOUSE_SIZE",

"WAREHOUSE_TYPE",

"QUERY_TAG",

"EXECUTION_STATUS",

"ERROR_CODE",

"ERROR_MESSAGE",

"START_TIME",

"ROWS_PRODUCED",

"PARTITIONS_SCANNED",

"PARTITIONS_TOTAL",

"PERCENTAGE_SCANNED_FROM_CACHE",

"BYTES_SPILLED_TO_LOCAL_STORAGE",

"BYTES_SPILLED_TO_REMOTE_STORAGE",

"QUEUED_REPAIR_TIME",

"QUEUED_OVERLOAD_TIME",

"QUEUED_PROVISIONING_TIME",

"TOTAL_ELAPSED_TIME",

"COMPILATION_TIME",

"EXECUTION_TIME" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."QUERY_HISTORY");

CREATE OR REPLACE TASK UPDATE_WAREHOUSE_METERING_HISTORY

WAREHOUSE = <YOUR_WAREHOUSE>

SCHEDULE = 'USING CRON 0 0 1 * * UTC'

AS CREATE OR REPLACE TABLE "SNOWFLAKE_USAGE_TS"."SNOWFLAKE"."WAREHOUSE_METERING_HISTORY" AS (SELECT

"WAREHOUSE_ID",

"WAREHOUSE_NAME",

"START_TIME",

"CREDITS_USED",

"CREDITS_USED_COMPUTE",

"CREDITS_USED_CLOUD_SERVICES",

"END_TIME" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."WAREHOUSE_METERING_HISTORY");

CREATE OR REPLACE TASK UPDATE_STAGES

WAREHOUSE = <YOUR_WAREHOUSE>

SCHEDULE = 'USING CRON 0 0 1 * * UTC'

AS CREATE OR REPLACE TABLE "SNOWFLAKE_USAGE_TS"."SNOWFLAKE"."STAGES" AS (SELECT

"STAGE_ID",

"STAGE_NAME",

"STAGE_SCHEMA_ID",

"STAGE_SCHEMA",

"STAGE_CATALOG_ID",

"STAGE_CATALOG",

"STAGE_TYPE",

"STAGE_OWNER",

"DELETED" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."STAGES");

CREATE OR REPLACE TASK UPDATE_STORAGE_USAGE

WAREHOUSE = <YOUR_WAREHOUSE>

SCHEDULE = 'USING CRON 0 0 1 * * UTC'

AS CREATE OR REPLACE TABLE "SNOWFLAKE_USAGE_TS"."SNOWFLAKE"."STORAGE_USAGE" AS (SELECT

"USAGE_DATE",

"FAILSAFE_BYTES",

"STAGE_BYTES",

"STORAGE_BYTES" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."STORAGE_USAGE");

CREATE OR REPLACE TASK UPDATE_DATABASE_STORAGE_USAGE_HISTORY

WAREHOUSE = <YOUR_WAREHOUSE>

SCHEDULE = 'USING CRON 0 0 1 * * UTC'

AS CREATE OR REPLACE TABLE "SNOWFLAKE_USAGE_TS"."SNOWFLAKE"."DATABASE_STORAGE_USAGE_HISTORY" AS (SELECT

"USAGE_DATE",

"DATABASE_ID",

"DATABASE_NAME",

"DELETED",

"AVERAGE_DATABASE_BYTES",

"AVERAGE_FAILSAFE_BYTES" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."DATABASE_STORAGE_USAGE_HISTORY");

alter task UPDATE_DATABASE_STORAGE_USAGE_HISTORY RESUME;

alter task UPDATE_STORAGE_USAGE RESUME;

alter task UPDATE_STAGES RESUME;

alter task UPDATE_WAREHOUSE_METERING_HISTORY RESUME;

alter task UPDATE_QUERY_HISTORY RESUME;

execute task UPDATE_DATABASE_STORAGE_USAGE_HISTORY;

execute task UPDATE_STORAGE_USAGE;

execute task UPDATE_STAGES;

execute task UPDATE_WAREHOUSE_METERING_HISTORY;

execute task UPDATE_QUERY_HISTORY;

GRANT USAGE ON DATABASE "SNOWFLAKE_USAGE_TS" TO ROLE <YOUR_ROLE>;

GRANT USAGE ON SCHEMA "SNOWFLAKE_USAGE_TS"."SNOWFLAKE" TO ROLE <YOUR_ROLE>;

GRANT SELECT ON ALL TABLES IN SCHEMA "SNOWFLAKE_USAGE_TS"."SNOWFLAKE" TO ROLE <YOUR_ROLE>;;Option 2

The following SQL commands grant permission for the role you use in your Snowflake connection to use the Snowflake database. They prepare you to query on the system database directly. Replace YOUR_ROLE with your specific information, either the ACCOUNTADMIN or a custom SpotApps role.

Run these commands as the ACCOUNTADMIN. If you don’t have account admin access, you can create a custom role with the permissions required to execute tasks. See the Snowflake documentation.

Make sure to be consistent in your SQL script. If you use double quotes as object identifiers for one object, you must use double quotes for all objects. If you run all the commands at once, use semicolons to separate the commands.

SQL commands:

Open the dropdown to view the SQL commands.

GRANT USAGE ON DATABASE "SNOWFLAKE" TO ROLE <YOUR_ROLE>;

GRANT USAGE ON SCHEMA "SNOWFLAKE"."ACCOUNT_USAGE" TO ROLE <YOUR_ROLE>;

GRANT SELECT ON "DATABASE_STORAGE_USAGE_HISTORY" IN SCHEMA "SNOWFLAKE"."ACCOUNT_USAGE" TO ROLE <YOUR_ROLE>;

GRANT SELECT ON "QUERY_HISTORY" IN SCHEMA "SNOWFLAKE"."ACCOUNT_USAGE" TO ROLE <YOUR_ROLE>;

GRANT SELECT ON "STAGES" IN SCHEMA "SNOWFLAKE"."ACCOUNT_USAGE" TO ROLE <YOUR_ROLE>;

GRANT SELECT ON "STORAGE_USAGE" IN SCHEMA "SNOWFLAKE"."ACCOUNT_USAGE" TO ROLE <YOUR_ROLE>;

GRANT SELECT ON "WAREHOUSE_METERING_HISTORY" IN SCHEMA "SNOWFLAKE"."ACCOUNT_USAGE" TO ROLE <YOUR_ROLE>;Deploy the Snowflake Performance and Consumption SpotApp

After you complete the prerequisites, you are ready to deploy the Snowflake Performance and Consumption SpotApp and begin leveraging its pre-built content.

To deploy the Snowflake Performance and Consumption SpotApp, refer to Deploying SpotApps.

Snowflake Performance and Consumption SpotApp schema

The following table describes the schema for the Snowflake Performance and Consumption SpotApp.

| Table | Column name | Column type | Required column |

|---|---|---|---|

QUERY_HISTORY |

QUERY_ID |

VARCHAR |

Y |

QUERY_HISTORY |

QUERY_TEXT |

VARCHAR |

Y |

QUERY_HISTORY |

DATABASE_ID |

NUMBER |

Y |

QUERY_HISTORY |

DATABASE_NAME |

VARCHAR |

Y |

QUERY_HISTORY |

SCHEMA_ID |

NUMBER |

N |

QUERY_HISTORY |

SCHEMA_NAME |

VARCHAR |

Y |

QUERY_HISTORY |

QUERY_TYPE |

VARCHAR |

Y |

QUERY_HISTORY |

SESSION_ID |

NUMBER |

N |

QUERY_HISTORY |

USER_NAME |

VARCHAR |

Y |

QUERY_HISTORY |

ROLE_NAME |

VARCHAR |

Y |

QUERY_HISTORY |

WAREHOUSE_ID |

NUMBER |

Y |

QUERY_HISTORY |

WAREHOUSE_NAME |

VARCHAR |

Y |

QUERY_HISTORY |

WAREHOUSE_SIZE |

VARCHAR |

Y |

QUERY_HISTORY |

WAREHOUSE_TYPE |

VARCHAR |

N |

QUERY_HISTORY |

QUERY_TAG |

VARCHAR |

N |

QUERY_HISTORY |

EXECUTION_STATUS |

VARCHAR |

N |

QUERY_HISTORY |

ERROR_CODE |

VARCHAR |

Y |

QUERY_HISTORY |

ERROR_MESSAGE |

VARCHAR |

Y |

QUERY_HISTORY |

START_TIME |

TIMESTAMP |

Y |

QUERY_HISTORY |

ROWS_PRODUCED |

NUMBER |

N |

QUERY_HISTORY |

PARTITIONS_SCANNED |

NUMBER |

N |

QUERY_HISTORY |

PARTITIONS_TOTAL |

NUMBER |

N |

QUERY_HISTORY |

PERCENTAGE_SCANNED_FROM_CACHE |

FLOAT |

N |

QUERY_HISTORY |

BYTES_SPILLED_TO_LOCAL_STORAGE |

NUMBER |

N |

QUERY_HISTORY |

BYTES_SPILLED_TO_REMOTE_STORAGE |

NUMBER |

N |

QUERY_HISTORY |

QUEUED_REPAIR_TIME |

NUMBER |

Y |

QUERY_HISTORY |

QUEUED_OVERLOAD_TIME |

NUMBER |

Y |

QUERY_HISTORY |

QUEUED_PROVISIONING_TIME |

NUMBER |

Y |

QUERY_HISTORY |

TOTAL_ELAPSED_TIME |

NUMBER |

Y |

QUERY_HISTORY |

COMPILATION_TIME |

NUMBER |

N |

QUERY_HISTORY |

EXECUTION_TIME |

NUMBER |

Y |

WAREHOUSE_METERING_HISTORY |

WAREHOUSE_ID |

NUMBER |

Y |

WAREHOUSE_METERING_HISTORY |

WAREHOUSE_NAME |

VARCHAR |

Y |

WAREHOUSE_METERING_HISTORY |

START_TIME |

TIMESTAMP |

Y |

WAREHOUSE_METERING_HISTORY |

CREDITS_USED |

NUMBER |

Y |

WAREHOUSE_METERING_HISTORY |

CREDITS_USED_COMPUTE |

NUMBER |

Y |

WAREHOUSE_METERING_HISTORY |

CREDITS_USED_CLOUD_SERVICES |

NUMBER |

Y |

WAREHOUSE_METERING_HISTORY |

END_TIME |

TIMESTAMP |

N |

STAGES |

STAGE_ID |

NUMBER |

N |

STAGES |

STAGE_NAME |

VARCHAR |

Y |

STAGES |

STAGE_SCHEMA_ID |

NUMBER |

N |

STAGES |

STAGE_SCHEMA |

VARCHAR |

Y |

STAGES |

STAGE_CATALOG_ID |

NUMBER |

N |

STAGES |

STAGE_CATALOG |

VARCHAR |

Y |

STAGES |

STAGE_TYPE |

VARCHAR |

Y |

STAGES |

STAGE_OWNER |

VARCHAR |

Y |

STAGES |

DELETED |

TIMESTAMP |

Y |

STORAGE_USAGE |

USAGE_DATE |

DATE |

Y |

STORAGE_USAGE |

FAILSAFE_BYTES |

NUMBER |

Y |

STORAGE_USAGE |

STAGE_BYTES |

NUMBER |

Y |

STORAGE_USAGE |

STORAGE_BYTES |

NUMBER |

Y |

DATABASE_STORAGE_USAGE_HISTORY |

USAGE_DATE |

DATE |

Y |

DATABASE_STORAGE_USAGE_HISTORY |

DATABASE_ID |

NUMBER |

Y |

DATABASE_STORAGE_USAGE_HISTORY |

DATABASE_NAME |

VARCHAR |

Y |

DATABASE_STORAGE_USAGE_HISTORY |

DELETED |

TIMESTAMP |

Y |

DATABASE_STORAGE_USAGE_HISTORY |

AVERAGE_DATABASE_BYTES |

FLOAT |

Y |

DATABASE_STORAGE_USAGE_HISTORY |

AVERAGE_FAILSAFE_BYTES |

FLOAT |

Y |